Are you ready to unpack how neural networks work in machine learning? If you've been searching for a guide to help you navigate the complexities of this technology, welcome: you have found the right article.

We're here to map out the path ahead, making the journey smoother and more rewarding for you.

Feeling overwhelmed by the vast possibilities and complexities of neural networks? We understand the frustration of struggling with the subtleties of machine learning. Let's work together to address your pain points and turn difficulties into triumphs. Our experience in this field will serve as a guide, leading you toward a deeper understanding and mastery of neural networks.

As experienced machine learning practitioners, we've delved deep into the inner workings of neural networks. Our mission is to equip you with knowledge and skills that will propel you toward success. Join us on this informative journey as we explore what neural networks can do and pave the way for your growth and achievement.

Key Takeaways

- Neural networks are loosely modeled on the human brain. They are built from nodes, layers, weights, biases, and activation functions, and they are trained with backpropagation.

- Different types of neural networks include Feedforward, Recurrent, Convolutional, and Generative Adversarial Networks, each serving specific purposes.

- Training neural networks involves data preprocessing, selecting appropriate loss functions, optimization techniques, and validation to improve accuracy.

- Applications of neural networks span image recognition, natural language processing, medical diagnostics, autonomous vehicles, financial forecasting, and gaming.

- Future trends in neural networks include Graph Neural Networks, Explainable AI, Federated Learning, Quantum Neural Networks, and Continual Learning, shaping the future of machine learning.

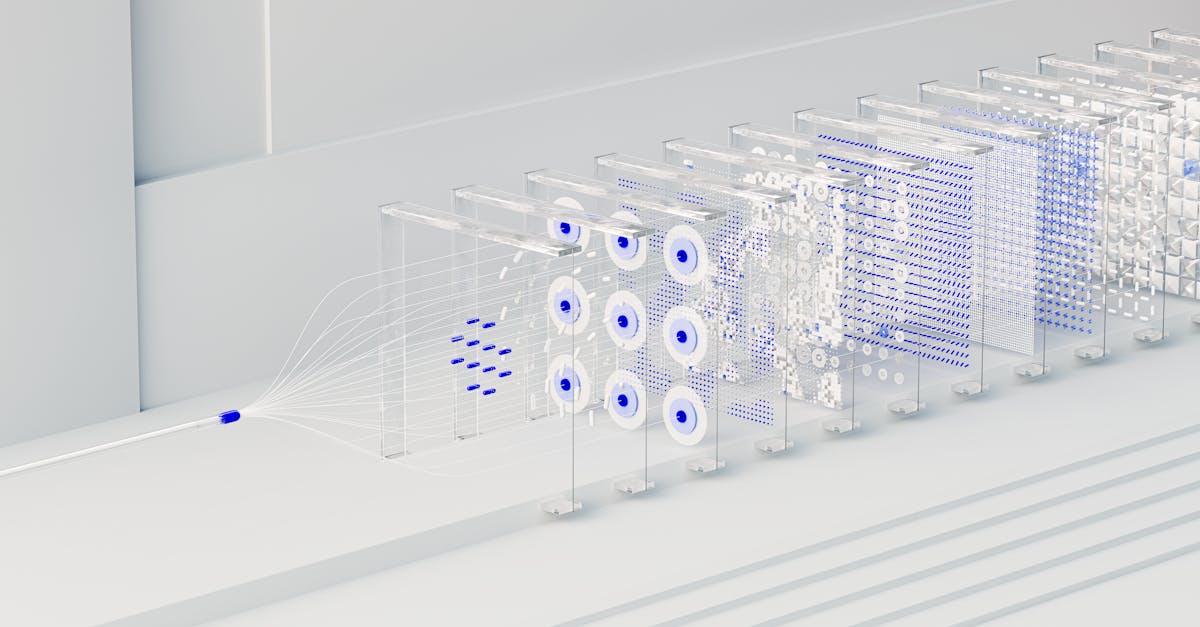

Understanding Neural Networks

To work with neural networks, you first need to grasp the foundational concepts.

These networks are loosely inspired by the way the human brain operates, with interconnected nodes working together to process complex information.

Here are some key points to help you understand neural networks better:

- Nodes: These are like neurons in the brain, receiving inputs, processing them, and passing signals to other nodes.

- Layers: Neural networks are structured in layers, including input, hidden, and output layers, each performing specific functions in the network.

- Weights and Biases: Adjusting these parameters during the training process is critical for the network to learn and improve its accuracy.

- Activation Functions: These functions introduce non-linearities to the network, allowing it to learn complex patterns and make better predictions.

- Backpropagation: This algorithm calculates the gradients of the loss function with respect to the network's parameters. Those gradients are then used to update the weights and biases.
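
The concepts in the list above can be sketched with a single node. This is a minimal illustration, not a full network: it assumes one sigmoid neuron, a squared-error loss, and made-up starting values for the weights, inputs, and learning rate. The function names `forward` and `backprop_step` are hypothetical.

```python
import math

def sigmoid(z):
    """Activation function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, bias, inputs):
    """One node: weighted sum of the inputs plus a bias, then the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def backprop_step(weights, bias, inputs, target, lr=0.5):
    """One gradient step on the loss 0.5 * (y - target)**2 via the chain rule."""
    y = forward(weights, bias, inputs)
    dloss_dy = y - target          # derivative of the loss w.r.t. the output
    dy_dz = y * (1.0 - y)          # derivative of the sigmoid
    delta = dloss_dy * dy_dz       # gradient w.r.t. the pre-activation z
    new_weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * delta
    return new_weights, new_bias

# Illustrative values only (assumptions, not from any dataset).
weights, bias = [0.1, -0.2], 0.0
inputs, target = [1.0, 2.0], 1.0

before = forward(weights, bias, inputs)
for _ in range(100):
    weights, bias = backprop_step(weights, bias, inputs, target)
after = forward(weights, bias, inputs)

print(f"prediction before training: {before:.3f}, after: {after:.3f}")
```

In a real network the same chain-rule step is repeated layer by layer, from the output back toward the input.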

To dig deeper into neural networks, you can refer to this comprehensive guide or try this interactive tutorial for hands-on experience.

Understanding these core concepts lays a solid foundation for mastering machine learning techniques and using them effectively in various applications.

Types of Neural Networks

When exploring neural networks for machine learning, it helps to know the main types available.

Each type serves specific purposes and excels in different applications within the field of artificial intelligence.

Here are some common types of neural networks:

- Feedforward Neural Networks: The simplest form where data flows in one direction, from input nodes through hidden nodes to output nodes.

- Recurrent Neural Networks (RNNs): Designed to recognize patterns in sequences of data, making them ideal for tasks like speech recognition and language modeling.

- Convolutional Neural Networks (CNNs): Particularly effective in image and video recognition due to their ability to preserve spatial structure.

- Generative Adversarial Networks (GANs): Composed of two neural networks, a generator and a discriminator, that compete with each other. The generator produces outputs, and the discriminator tries to tell them apart from real data. GANs are popular for generating realistic images and are one of the techniques used to create deepfakes.
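
Two of the ideas in the list above can be sketched in a few lines: a convolution reuses one small kernel at every position (which is how CNNs keep spatial structure), and a recurrent step carries a hidden state from one sequence element to the next. The functions `conv1d` and `rnn_run`, the kernel, and all weights below are assumptions chosen for illustration, not a trained model.

```python
import math

def conv1d(signal, kernel):
    """Slide one shared kernel along the signal (no padding, stride 1)."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def rnn_run(sequence, w_in, w_rec, bias):
    """Scalar recurrent cell: each new state depends on the input and the previous state."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h + bias)
        states.append(h)
    return states

# A difference kernel responds where the signal steps up or down.
edges = conv1d([0, 0, 1, 1, 1, 0], [-1, 1])

# Only the first input is non-zero, yet later states are still non-zero:
# the hidden state acts as a memory of earlier inputs.
states = rnn_run([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5, bias=0.0)

print(edges)
print([round(h, 3) for h in states])
```

A feedforward network, by contrast, has no carried state and no weight sharing across positions: each input is mapped to an output independently.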

Exploring the characteristics and applications of each type of neural network lays a solid foundation for mastering machine learning techniques.

For a more in-depth understanding of these networks, check out this comprehensive guide to neural networks from Stanford University.

Training Neural Networks

To train neural networks well, you need to understand the process thoroughly.

We start by feeding the network with input data, which goes through various layers to produce an output.

During training, the network adjusts its weights based on the error between the predicted output and the actual target.

Here are some key points to keep in mind when training neural networks:

- Data Preprocessing: Before training the network, it’s critical to preprocess the data to ensure it’s in the right format and free from outliers that could affect the training process.

- Loss Function: The choice of a loss function is critical as it measures how well the network is performing. Common loss functions include Mean Squared Error for regression tasks and Cross-Entropy for classification.

- Optimization: Optimizers such as Stochastic Gradient Descent are used to minimize the loss function by adjusting the weights of the network during training.

- Validation: Splitting the data into training and validation sets helps evaluate the model's performance on unseen data and detect overfitting.
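
The four steps above can be put together in a small sketch. To keep it readable, it assumes the simplest possible model (a single linear unit, `y = w * x + b`) trained on synthetic data. The data, the 80/20 split, the learning rate, and the helper names `standardize` and `mse` are all assumptions for illustration.

```python
import random

def standardize(values):
    """Preprocessing: rescale values to zero mean and unit variance."""
    mean = sum(values) / len(values)
    std = (sum((v - mean) ** 2 for v in values) / len(values)) ** 0.5
    return [(v - mean) / std for v in values]

def mse(w, b, data):
    """Loss function: Mean Squared Error of the model y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

# Synthetic regression data (an assumption): a noisy straight line.
random.seed(0)
raw_x = [float(i) for i in range(50)]
targets = [3.0 * x + 2.0 + random.gauss(0.0, 1.0) for x in raw_x]

# Preprocess the inputs, then split 80/20 into training and validation sets.
data = list(zip(standardize(raw_x), targets))
random.shuffle(data)
split = int(0.8 * len(data))
train, val = data[:split], data[split:]

# Optimization: Stochastic Gradient Descent, one example at a time.
w, b, lr = 0.0, 0.0, 0.05
val_before = mse(w, b, val)
for epoch in range(20):
    random.shuffle(train)
    for x, y in train:
        err = w * x + b - y
        w -= lr * 2 * err * x   # gradient of the squared error w.r.t. w
        b -= lr * 2 * err       # gradient of the squared error w.r.t. b
val_after = mse(w, b, val)

print(f"validation MSE before: {val_before:.2f}, after: {val_after:.2f}")
```

The validation examples are never used for weight updates, so a validation loss that stays well above the training loss is a sign of overfitting.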

For a more in-depth understanding of training neural networks, we recommend checking out this comprehensive guide from Stanford University.

Applications of Neural Networks in Machine Learning

Neural networks play a central role in many machine learning applications.

Let's look at some key domains where neural networks are widely used:

- Image Recognition: Neural networks excel in image recognition tasks, enabling systems to identify objects, patterns, and even faces in images with remarkable accuracy.

- Natural Language Processing (NLP): In the field of NLP, neural networks are used for tasks like language translation, sentiment analysis, and chatbot development.

- Medical Diagnostics: Neural networks are being employed in medical diagnostics to assist in identifying diseases from medical images like X-rays and MRIs.

- Autonomous Vehicles: In the automotive industry, neural networks power the decision-making processes of self-driving cars, helping them navigate and make decisions on the road.

- Financial Forecasting: Neural networks are used in financial institutions for tasks such as predicting stock prices, detecting fraud, and optimizing investment strategies.

- Gaming: Neural networks are integrated into gaming environments for tasks like opponent AI behavior modeling, game environment simulations, and personalized gaming experiences.

For further exploration into the applications of neural networks in machine learning, refer to this detailed guide from Stanford University.

Future Trends in Neural Networks

As technology continues to advance, the future of neural networks in machine learning looks promising.

Here are some key trends that we can expect to see in the evolution of neural networks:

- Graph Neural Networks (GNNs): GNNs are gaining traction for their ability to model complex relationships in data, making them ideal for tasks like social network analysis and recommendation systems.

- Explainable AI: The demand for transparency in AI decisions is driving the development of explainable neural networks, allowing us to understand how these models arrive at specific outcomes.

- Federated Learning: With privacy concerns on the rise, federated learning enables multiple devices to collaboratively train a shared model without sharing raw data, which helps keep that data private.

- Quantum Neural Networks: The intersection of quantum computing and neural networks may open up new possibilities for solving complex problems that are beyond the capabilities of classical computers.

- Continual Learning: Neural networks that can adapt to new data without catastrophic forgetting are on the horizon, paving the way for more flexible and efficient models.
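
Of the trends above, federated learning is the easiest to sketch in code. The example below assumes a weighted-averaging scheme in which each device trains on its own data and sends back only its updated weight. The model (`y = w * x`), the three devices, their data, and the function names `local_update` and `federated_round` are all hypothetical.

```python
def local_update(w, local_data, lr=0.05, steps=20):
    """Gradient descent on one device's private data for the model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in local_data) / len(local_data)
        w -= lr * grad
    return w

def federated_round(global_w, devices):
    """One round: devices train locally; the server averages weights, not data."""
    local_ws = [local_update(global_w, data) for data in devices]
    sizes = [len(data) for data in devices]
    # Weight each device's result by how many examples it trained on.
    return sum(w * n for w, n in zip(local_ws, sizes)) / sum(sizes)

# Each inner list stays on its own device (illustrative data following y = 2x).
devices = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
]

w = 0.0
for _ in range(5):
    w = federated_round(w, devices)

print(f"shared weight after 5 rounds: {w:.4f}")
```

Only the scalar weight crosses the network in this sketch. Real systems typically add further protections on top, since model updates can still leak information about the underlying data.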

Stay tuned as we explore these future trends and their implications for the field of machine learning.

For further insights into advances in neural networks, check out the detailed guide provided by Stanford University.
