Are you searching for a powerful tool to improve your regression analysis? In today’s data-driven world, understanding complex relationships between variables is critical.

We know the struggle of deciphering complex patterns and making accurate predictions.

That’s where neural networks come into play, changing the way we approach regression tasks.

Feeling overwhelmed by traditional regression methods that fall short in capturing the subtleties of your data? Our expertise in neural networks for regression will help ease those pain points. Say goodbye to those limitations and hello to more accurate forecasts. With our guidance, you’ll unlock the full potential of your data and make smart decisions with confidence.

As experts in the field, we are dedicated to equipping you with the knowledge and tools needed to succeed. Our tailored approach ensures that you, our valued readers, receive the guidance you deserve. Together, we’ll explore the world of neural networks for regression, clarifying complexities and paving the way for data-driven excellence.

Key Takeaways

- Neural networks are powerful for regression tasks, excelling in capturing complex non-linear relationships between variables.

- Traditional regression methods are more interpretable but struggle with complex relationships, whereas neural networks are considered “black boxes” due to their complex structures.

- Building a neural network model for regression involves steps like data preprocessing, selecting the right architecture, training, and evaluating with metrics like Mean Squared Error.

- Training and fine-tuning neural networks for regression require adjusting hyperparameters carefully, using techniques like dropout regularization, and monitoring loss functions for optimal performance.

- Implementing neural networks for accurate regression requires attention to data preprocessing, feature scaling, selecting the right architecture, and using regularization techniques to avoid overfitting.

- Optimizing hyperparameters through techniques like Grid Search or Random Search can further improve neural network performance for regression tasks.

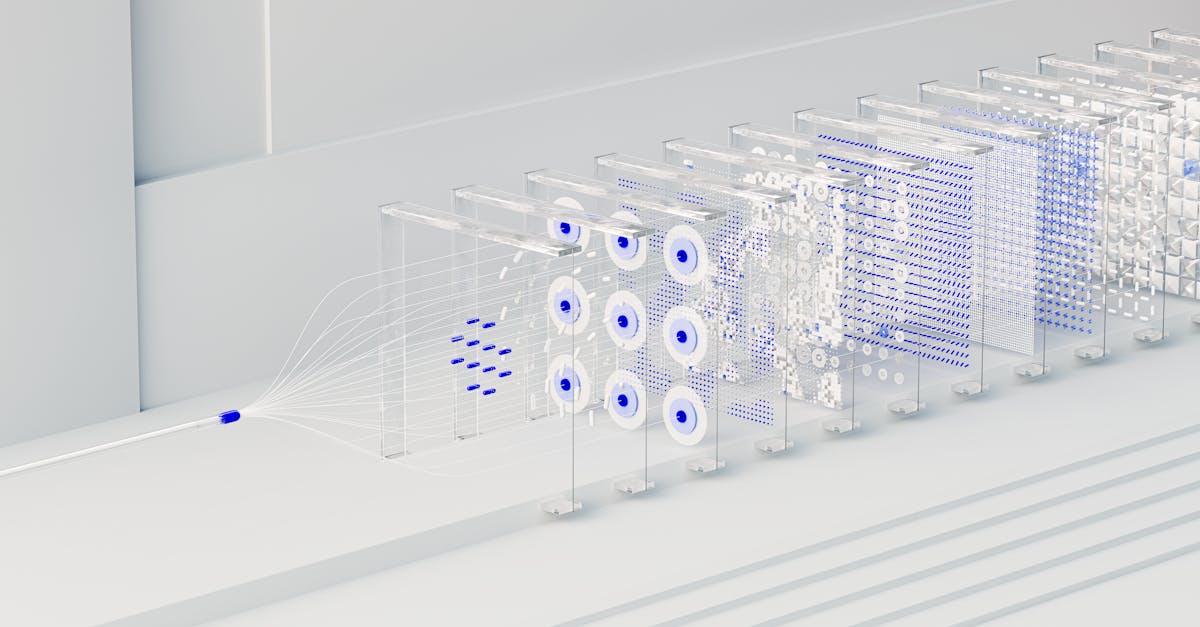

Understanding the Basics of Neural Networks

Neural networks are a key component of machine learning and play a critical role in various applications.

Let’s investigate the basics to grasp their significance:

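- Neurons: These are the basic computational units, loosely inspired by biological neurons. Each one computes a weighted sum of its inputs and passes the result through an activation function.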

- Layers: Neurons are organized in layers, including an input layer, hidden layers, and an output layer.

- Weights and Biases: These learnable parameters control how strongly each input influences a neuron. Training adjusts them so the network produces accurate predictions.

- Activation Functions: These introduce non-linear properties to the network, enabling it to learn complex patterns.

When data is fed into a neural network, it passes through the layers, with each layer processing and interpreting the information.
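To make this concrete, here is a minimal pure-Python sketch of one forward pass. The weights, biases, and layer sizes are arbitrary illustrative values (not taken from a trained model), the helper names `dense_layer`, `relu`, and `identity` are hypothetical, and the output neuron uses a linear (identity) activation, which is a common choice for regression outputs.

```python
def relu(z):
    """Rectified linear unit: keeps positive values, maps negatives to 0."""
    return max(0.0, z)


def identity(z):
    """Linear activation, commonly used on a regression output neuron."""
    return z


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer.

    weights[j][i] is the weight from input i to neuron j and biases[j] is
    neuron j's bias; each neuron outputs activation(sum_i w_ji * x_i + b_j).
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b  # weighted sum plus bias
        outputs.append(activation(z))
    return outputs


# Illustrative network: 2 inputs -> 3 hidden ReLU neurons -> 1 linear output.
x = [1.0, 2.0]
hidden = dense_layer(x, [[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]], [0.0, -1.0, 0.5], relu)
output = dense_layer(hidden, [[1.0, 0.5, -2.0]], [0.1], identity)
print(hidden)  # [0.0, 2.0, 0.5]
print(output)  # [0.1]
```

The first hidden neuron's weighted sum is negative (-1.5), so ReLU switches it off; that kind of input-dependent on/off behavior is where the non-linearity comes from.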

Through a process called backpropagation, the network computes how much each parameter contributed to the prediction error, and an optimizer such as gradient descent then adjusts the parameters to reduce that error.
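For a single linear neuron trained with mean squared error, backpropagation reduces to one application of the chain rule, which makes the idea easy to see. The sketch below is a minimal illustration under that simplifying assumption, using plain gradient descent as the optimizer. The toy data (generated from y = 2x + 1), the learning rate, and the step count are arbitrary choices.

```python
def mse_loss(w, b, xs, ys):
    """Mean squared error of the linear model y_hat = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_step(w, b, xs, ys, lr):
    """One gradient-descent update.

    By the chain rule, dL/dw = 2/n * sum(error * x) and dL/db = 2/n * sum(error),
    where error = y_hat - y.
    """
    n = len(xs)
    errors = [(w * x + b) - y for x, y in zip(xs, ys)]
    grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
    grad_b = 2.0 / n * sum(errors)
    # Step against the gradient to reduce the loss.
    return w - lr * grad_w, b - lr * grad_b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # toy data from y = 2x + 1
w, b = 0.0, 0.0
losses = [mse_loss(w, b, xs, ys)]
for _ in range(500):
    w, b = gradient_step(w, b, xs, ys, lr=0.05)
    losses.append(mse_loss(w, b, xs, ys))
print(round(w, 3), round(b, 3))  # approaches 2.0 and 1.0
```

In a multi-layer network the same chain rule is applied layer by layer, from the output back to the input.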

To dig deeper into the details of neural networks, refer to this comprehensive guide on neural networks.

Now that we’ve covered the basic principles, let’s look at how neural networks change regression analysis through their ability to capture complex relationships between variables and deliver accurate predictions.

Neural Networks vs. Traditional Regression Methods

When comparing Neural Networks with traditional regression methods, several key differences emerge:

- Complex Relationships: Traditional regression methods work well with linear relationships, while Neural Networks excel in capturing complex non-linear relationships in data.

- Feature Engineering: Traditional regression often requires manual feature engineering, whereas Neural Networks can automatically learn features from the data, reducing human intervention.

- Flexibility: Neural Networks are more flexible and can adapt to various types of data, making them suitable for a wide range of regression tasks beyond the capabilities of traditional methods.

- Model Interpretability: Traditional regression models are often more interpretable, as they provide insights into how each feature affects the outcome. In contrast, Neural Networks are often considered “black boxes” due to their complex structures.

- Performance: In terms of predictive performance, Neural Networks often outperform traditional regression methods on large datasets with complex patterns.
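A tiny worked example makes the first point concrete. Assume a V-shaped target, y = |x|, sampled at five symmetric points. An ordinary least-squares line cannot follow the bend, while a network with two ReLU hidden neurons can represent it exactly. The network weights below are set by hand for illustration (they are not learned), and the helper names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def relu(z):
    return max(0.0, z)


def tiny_network(x):
    """Two hidden ReLU neurons (input weights +1 and -1) summed by a linear output."""
    return relu(1.0 * x) + relu(-1.0 * x)


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [abs(x) for x in xs]  # V-shaped, non-linear target

slope, intercept = fit_line(xs, ys)
line_mse = sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
net_mse = sum((tiny_network(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(round(line_mse, 2), net_mse)  # 0.56 0.0
```

The best straight line is flat (slope 0) at the mean of the targets, so it misses the pattern entirely; the non-linear activations are what let the network bend.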

Considering these factors, it’s clear that Neural Networks offer a powerful alternative to traditional regression methods in capturing complex relationships and making accurate predictions.

For a deeper look at this topic, see the detailed comparison of Neural Networks vs. Regression Analysis on DataScienceCentral.

Building a Neural Network Model for Regression

When building a neural network model for regression, there are several key steps to follow for optimal results.

First, data preprocessing is crucial.

It is important to normalize or standardize the input features so that the neural network trains efficiently.
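As a minimal sketch of standardization (z-scores), assume a single numeric feature held in a plain Python list. The mean and standard deviation are computed on the training data only and then reused for validation and test data, so no information leaks from the held-out sets. The feature values and function names are illustrative.

```python
def fit_standardizer(values):
    """Return (mean, std) of a training feature; std falls back to 1.0 for a constant feature."""
    n = len(values)
    mean = sum(values) / n
    std = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    return mean, (std if std > 0 else 1.0)


def standardize(values, mean, std):
    """Rescale values to zero mean and unit variance using the training statistics."""
    return [(v - mean) / std for v in values]


train_area = [50.0, 80.0, 110.0, 140.0]  # illustrative feature, e.g. floor area
mean, std = fit_standardizer(train_area)
train_z = standardize(train_area, mean, std)
test_z = standardize([95.0], mean, std)  # new data reuses the training mean and std
print(mean, test_z)  # 95.0 [0.0]
```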

Next, selecting an appropriate architecture is essential.

This includes deciding on the number of hidden layers, neurons per layer, and activation functions.

Each of these choices impacts how well the neural network can capture the complexities of the regression problem at hand.
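One way to see how these choices interact is to write the architecture down as a configuration and count the weights and biases it implies. The sketch below assumes fully connected (dense) layers. The configuration keys and the layer sizes `[8, 64, 32, 1]` (8 input features, two hidden layers, and a single output neuron for the regression target) are hypothetical.

```python
def count_parameters(layer_sizes):
    """Number of weights and biases in a fully connected network.

    layer_sizes lists the neurons per layer, input layer first. A dense layer
    from n_in to n_out neurons has n_in * n_out weights and n_out biases.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical regression architecture: ReLU hidden layers, linear output.
architecture = {
    "layer_sizes": [8, 64, 32, 1],
    "hidden_activation": "relu",
    "output_activation": "linear",
}
print(count_parameters(architecture["layer_sizes"]))  # 2689
```

Adding or widening hidden layers increases the parameter count, and with it both the network's capacity to model complex relationships and its risk of overfitting a small dataset.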

Also, training the neural network involves iteratively adjusting the weights and biases to minimize the loss function.

This process requires careful tuning of hyperparameters such as the learning rate and batch size to ensure convergence.
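The learning rate's effect on convergence is easy to demonstrate on a one-dimensional quadratic loss, (w - 3)^2, whose gradient is 2(w - 3). In this toy setting a small learning rate converges and a too-large one diverges; the specific values are illustrative. The second helper shows how batch size determines how many weight updates happen per pass over the data (one epoch). Both functions are hypothetical sketches, not a library API.

```python
def descend(lr, steps=50, w=0.0):
    """Plain gradient descent on the toy loss (w - 3)^2, whose minimum is at w = 3."""
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)
        w -= lr * grad
    return w


def batch_indices(n_samples, batch_size):
    """Split sample indices into consecutive mini-batches (data is usually shuffled first)."""
    return [list(range(start, min(start + batch_size, n_samples)))
            for start in range(0, n_samples, batch_size)]


print(descend(lr=0.1))  # close to 3.0: each step shrinks the error by a factor of 0.8
print(descend(lr=1.1))  # far from 3.0: each step multiplies the error by -1.2
print(batch_indices(10, batch_size=4))  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
print(len(batch_indices(10, batch_size=4)))  # 3 updates per epoch
```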

When it comes to evaluating the model, metrics like Mean Squared Error (MSE) or Root Mean Squared Error (RMSE) are commonly used to assess how well the neural network is performing on the regression task.
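Both metrics are short enough to write out directly. This plain-Python sketch uses made-up targets and predictions. RMSE is the square root of MSE, which puts the error back in the same units as the target variable.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def root_mean_squared_error(y_true, y_pred):
    """Square root of the MSE, expressed in the same units as the target."""
    return math.sqrt(mean_squared_error(y_true, y_pred))


y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.5, 5.0, 3.0, 8.0]
print(mean_squared_error(y_true, y_pred))       # 0.5625
print(root_mean_squared_error(y_true, y_pred))  # 0.75
```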

Regular validation checks are critical for detecting and preventing overfitting.
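A common way to act on those validation checks is early stopping: stop training once the validation loss has not improved for a set number of epochs (the "patience") and keep the weights from the best epoch. The sketch below implements only the stopping rule. The validation losses are made-up numbers in which the loss starts rising after epoch 3, a typical sign of overfitting.

```python
def early_stopping(val_losses, patience):
    """Return (best_epoch, stop_epoch) for a list of per-epoch validation losses.

    Training stops after `patience` consecutive epochs without a new best loss;
    the weights from best_epoch are the ones you would keep.
    """
    best_loss = float("inf")
    best_epoch = 0
    stop_epoch = len(val_losses) - 1  # if patience never runs out, train to the end
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                stop_epoch = epoch
                break
    return best_epoch, stop_epoch


# Made-up validation MSE per epoch: improves, then rises as the model overfits.
val_losses = [0.90, 0.60, 0.45, 0.40, 0.42, 0.44, 0.47, 0.50, 0.55]
print(early_stopping(val_losses, patience=3))  # (3, 6)
```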

For more in-depth guidance on building a neural network model for regression, you can refer to this comprehensive neural network tutorial that covers the fundamentals and advanced techniques in neural network development.

Training and Fine-Tuning Your Neural Network

When training a neural network for regression, it’s critical to find the right balance in adjusting hyperparameters for optimal performance.

Here are some key steps in the process:

- Initializing Weights: Setting the initial weights of the neural network, typically to small random values, is a critical starting point.

- Forward Propagation: Calculating the output of the network based on the input data.

- Backward Propagation: Computing the gradient of the error with respect to each weight, which is then used to update the weights.

- Fine-Tuning: Iteratively adjusting hyperparameters to improve the model’s accuracy and reduce errors.
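The first three steps, plus the weight update they feed into, can be combined into a small training loop. This is a minimal pure-Python sketch, assuming one input, one hidden layer of tanh neurons, a linear output, mean squared error, and full-batch gradient descent. The target y = x^2, the layer width, learning rate, epoch count, and seed are arbitrary illustrative choices, and `train_tiny_network` is a hypothetical name. In practice a framework such as PyTorch or TensorFlow would compute the gradients automatically.

```python
import math
import random


def train_tiny_network(xs, ys, hidden=8, lr=0.05, epochs=2000, seed=0):
    """Train a 1-input, `hidden`-unit tanh layer, 1-output network; return per-epoch MSE."""
    rng = random.Random(seed)
    # Step 1: initialize weights with small random values; biases start at zero.
    w1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b2 = 0.0
    n = len(xs)
    losses = []
    for _ in range(epochs):
        g_w1, g_b1, g_w2, g_b2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        loss = 0.0
        for x, y in zip(xs, ys):
            # Step 2: forward propagation.
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
            y_hat = sum(w2[j] * h[j] for j in range(hidden)) + b2
            error = y_hat - y
            loss += error ** 2 / n
            # Step 3: backward propagation (chain rule from the loss to each parameter).
            d_out = 2.0 * error / n  # dLoss/dy_hat for this sample
            g_b2 += d_out
            for j in range(hidden):
                g_w2[j] += d_out * h[j]
                d_hidden = d_out * w2[j] * (1.0 - h[j] ** 2)  # tanh'(z) = 1 - tanh(z)^2
                g_w1[j] += d_hidden * x
                g_b1[j] += d_hidden
        losses.append(loss)
        # Gradient-descent update using the gradients accumulated over all samples.
        for j in range(hidden):
            w1[j] -= lr * g_w1[j]
            b1[j] -= lr * g_b1[j]
            w2[j] -= lr * g_w2[j]
        b2 -= lr * g_b2
    return losses


xs = [i / 10 for i in range(-10, 11)]
ys = [x * x for x in xs]  # a non-linear target
losses = train_tiny_network(xs, ys)
print(losses[0], losses[-1])  # the training loss should drop substantially
```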

To improve performance, we recommend using techniques like dropout regularization to prevent overfitting and learning rate scheduling to improve convergence.
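Both techniques fit in a few lines. The sketch below shows inverted dropout, the common formulation in which surviving activations are scaled by 1 / (1 - rate) during training so nothing needs rescaling at inference time, and a step-decay learning-rate schedule, which is only one of several schedules in use (exponential and cosine decay are others). The function names, the 0.5 drop factor, and the 10-epoch interval are illustrative assumptions.

```python
import random


def inverted_dropout(activations, rate, rng, training=True):
    """Zero each activation with probability `rate` during training and scale the
    survivors by 1 / (1 - rate); at inference time the input passes through unchanged."""
    if not training or rate == 0.0:
        return list(activations)
    keep_prob = 1.0 - rate
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


def step_decay(initial_lr, epoch, drop=0.5, every=10):
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return initial_lr * drop ** (epoch // every)


rng = random.Random(0)
print(inverted_dropout([1.0, 1.0, 1.0, 1.0], rate=0.5, rng=rng))  # each entry is 0.0 or 2.0
print(inverted_dropout([1.0, 1.0], rate=0.5, rng=rng, training=False))  # [1.0, 1.0]
print([step_decay(0.1, epoch) for epoch in (0, 10, 25)])  # [0.1, 0.05, 0.025]
```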

Regular monitoring of loss functions and validation metrics helps in assessing the model’s progress.

Also, conducting hyperparameter tuning through techniques like Grid Search or Random Search can further optimize the neural network for regression tasks.
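Random Search is simple to sketch. Here `validation_loss` is a hypothetical stand-in for "train a model with these hyperparameters and return its validation MSE", replaced by a made-up function with its minimum near a learning rate of 0.01 and 64 hidden units so the example runs instantly. Sampling the learning rate on a log scale is a common convention, not a requirement.

```python
import math
import random


def validation_loss(lr, hidden_units):
    """Hypothetical stand-in for training a model and measuring validation MSE."""
    return (math.log10(lr) + 2.0) ** 2 + (math.log2(hidden_units) - 6.0) ** 2 / 4.0


def random_search(objective, n_trials, seed=0):
    """Sample hyperparameters at random and keep the combination with the lowest score."""
    rng = random.Random(seed)
    best_score, best_params = float("inf"), None
    for _ in range(n_trials):
        params = {
            "lr": 10 ** rng.uniform(-4, -1),  # log-uniform between 1e-4 and 1e-1
            "hidden_units": rng.choice([16, 32, 64, 128, 256]),
        }
        score = objective(**params)
        if score < best_score:
            best_score, best_params = score, params
    return best_score, best_params


score, params = random_search(validation_loss, n_trials=30)
print(score, params)
```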

It’s essential to find the right balance between underfitting and overfitting to achieve the best results.

For more detailed insights into training neural networks and fine-tuning techniques, refer to this comprehensive neural network tutorial.

Implementing Neural Networks for Accurate Regression

When implementing neural networks for accurate regression, it’s essential to pay close attention to data preprocessing.

Ensuring that our dataset is clean, normalized, and well-structured sets a strong foundation for training a high-performing model.

Feature scaling is another critical aspect to consider.

By scaling our input features, we can prevent certain features from dominating the learning process, leading to more balanced model training.
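As an illustration, min-max scaling maps each feature onto the [0, 1] range so that, for example, a floor area in the hundreds no longer dwarfs a room count in single digits. The sketch assumes rows of numeric features stored in plain lists. The ranges are learned from the training rows only, so new rows can land slightly outside [0, 1]. The data and helper names are hypothetical.

```python
def fit_min_max(rows):
    """Per-feature (min, max) learned from the training rows only."""
    return [(min(col), max(col)) for col in zip(*rows)]


def min_max_scale(rows, ranges):
    """Map each feature to [0, 1] using the training ranges (constant features map to 0.0)."""
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, (lo, hi) in zip(row, ranges)]
        for row in rows
    ]


# Illustrative rows: [floor area in m^2, number of rooms]
train = [[50.0, 1.0], [120.0, 3.0], [200.0, 5.0]]
test = [[80.0, 2.0]]
ranges = fit_min_max(train)
train_scaled = min_max_scale(train, ranges)
test_scaled = min_max_scale(test, ranges)
print(test_scaled)  # [[0.2, 0.25]]
```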

In addition, choosing the right architecture for our neural network plays a key role in achieving accurate regression results.

We need to determine the number of hidden layers, the number of neurons in each layer, and the activation functions that best suit our specific regression task.

Regularization techniques, such as L1 and L2 regularization, can help prevent our model from overfitting, ensuring that it generalizes well to unseen data.
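In loss terms, L1 regularization adds a penalty proportional to the sum of absolute weight values, and L2 adds one proportional to the sum of squared weights. The sketch below adds both to MSE. The coefficients and values are illustrative, and excluding biases from the penalty is a common convention rather than a rule. Some libraries scale the L2 term by 1/2, so check the convention of the framework you use.

```python
def regularized_mse(y_true, y_pred, weights, l1=0.0, l2=0.0):
    """MSE plus L1 (l1 * sum |w|) and L2 (l2 * sum w^2) penalties on the weights."""
    n = len(y_true)
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n
    l1_penalty = l1 * sum(abs(w) for w in weights)
    l2_penalty = l2 * sum(w * w for w in weights)
    return mse + l1_penalty + l2_penalty


def penalty_gradient(w, l1=0.0, l2=0.0):
    """Gradient of the penalty for one weight (the L1 subgradient at w = 0 is taken as 0)."""
    sign = (w > 0) - (w < 0)
    return l1 * sign + 2.0 * l2 * w


weights = [0.5, -1.0, 2.0]
# about 0.16525: 0.125 (MSE) + 0.035 (L1) + 0.00525 (L2)
print(regularized_mse([1.0, 2.0], [1.5, 2.0], weights, l1=0.01, l2=0.001))
```

The penalty gradient always points away from zero, so subtracting it during training pulls each weight toward zero: by a constant amount for L1 (which can drive weights exactly to zero) and proportionally to the weight for L2.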

Also, optimizing hyperparameters through techniques like Grid Search or Random Search fine-tunes our model for optimal performance.
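Grid Search evaluates every combination of candidate values, so its cost is the product of the list lengths (3 × 3 = 9 training runs below). In this sketch, `fake_validation_loss` is a hypothetical stand-in for training and validating a model, chosen so the best combination is known in advance.

```python
import itertools


def grid_search(evaluate, grid):
    """Try every combination in `grid` (dict of name -> candidate list) and return
    (best_score, best_params), where lower scores are better."""
    names = list(grid)
    best_score, best_params = float("inf"), None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if score < best_score:
            best_score, best_params = score, params
    return best_score, best_params


def fake_validation_loss(lr, dropout):
    """Hypothetical stand-in for training a model and measuring validation MSE."""
    return abs(lr - 0.01) * 10 + abs(dropout - 0.2)


grid = {"lr": [0.001, 0.01, 0.1], "dropout": [0.0, 0.2, 0.5]}
score, params = grid_search(fake_validation_loss, grid)
print(score, params)  # 0.0 {'lr': 0.01, 'dropout': 0.2}
```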

To dig deeper into this topic, you can read more about neural network architecture design for regression tasks.

Ultimately, with the right approach and techniques, we can build highly accurate neural networks for regression tasks.