Are you looking to understand how neural network algorithms work? This article walks through how these algorithms operate and explains the key ideas along the way.

Feeling overwhelmed by the technical jargon around neural network algorithms? We understand the frustration of struggling with these concepts. Let us be your guide: we address the pain points you're facing and help you work through them.

Drawing on years of experience in the field, we're here to provide practical ideas and in-depth knowledge that will help you grasp neural network algorithms. Our aim is to equip you with the tools and understanding you need to get comfortable with this challenging subject.

Key Takeaways

- Algorithms in neural networks adjust synaptic weights and biases through backpropagation for iterative learning and improvement.

- Neural networks process data via interconnected nodes arranged in layers: input, hidden, and output.

- Training algorithms such as backpropagation, and architectures such as CNNs, RNNs, and GANs, play a significant role in neural network functionality.

- Core concepts such as activation functions, gradient descent, overfitting, underfitting, loss functions, and hyperparameters are key in understanding neural network algorithms.

What is an Algorithm?

An algorithm is a set of rules or instructions designed to solve a specific problem by performing a series of defined actions. In the field of neural networks, algorithms play a critical role in processing data and making predictions.

In a neural network, the learning algorithm adjusts synaptic weights and biases through a process known as backpropagation, which measures how much each weight contributed to the prediction error.

This iterative process allows the network to learn from its mistakes and improve its accuracy over time.
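As a minimal sketch of this iterative process, the Python snippet below trains a single linear neuron with gradient descent. The toy data, the learning rate, and the names `train`, `xs`, and `ys` are assumptions made for illustration and do not come from any particular library. With only one neuron, backpropagation reduces to applying the chain rule once per parameter; deeper networks repeat the same step layer by layer.

```python
# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]


def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit a single linear neuron y_hat = w * x + b by gradient descent."""
    w, b = 0.0, 0.0  # start from arbitrary initial parameters
    n = len(xs)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            y_hat = w * x + b            # forward pass: make a prediction
            error = y_hat - y            # how wrong the prediction is
            grad_w += 2 * error * x / n  # chain rule: d(MSE)/dw
            grad_b += 2 * error / n      # chain rule: d(MSE)/db
        # Step against the gradient to reduce the mean squared error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Each pass over the data nudges `w` and `b` toward values that reduce the error, which is the "learning from mistakes" described above.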

One key aspect of neural network algorithms is their ability to adapt and optimize themselves based on the data they process.

This adaptive learning sets them apart from traditional algorithms and enables them to tackle complex tasks with remarkable efficiency.

To dig deeper into algorithms and their significance in neural networks, check out this resource on Algorithm Design for a detailed exploration of algorithmic principles.

After all, understanding the core concepts of algorithms is critical to grasping the underlying mechanisms of neural networks and using their full potential.

Understanding Neural Networks

Neural networks are computing systems inspired by the human brain’s structure and function.

- Consisting of interconnected nodes that work hand-in-hand to process complex information and detect patterns, they excel in tasks like image and speech recognition.

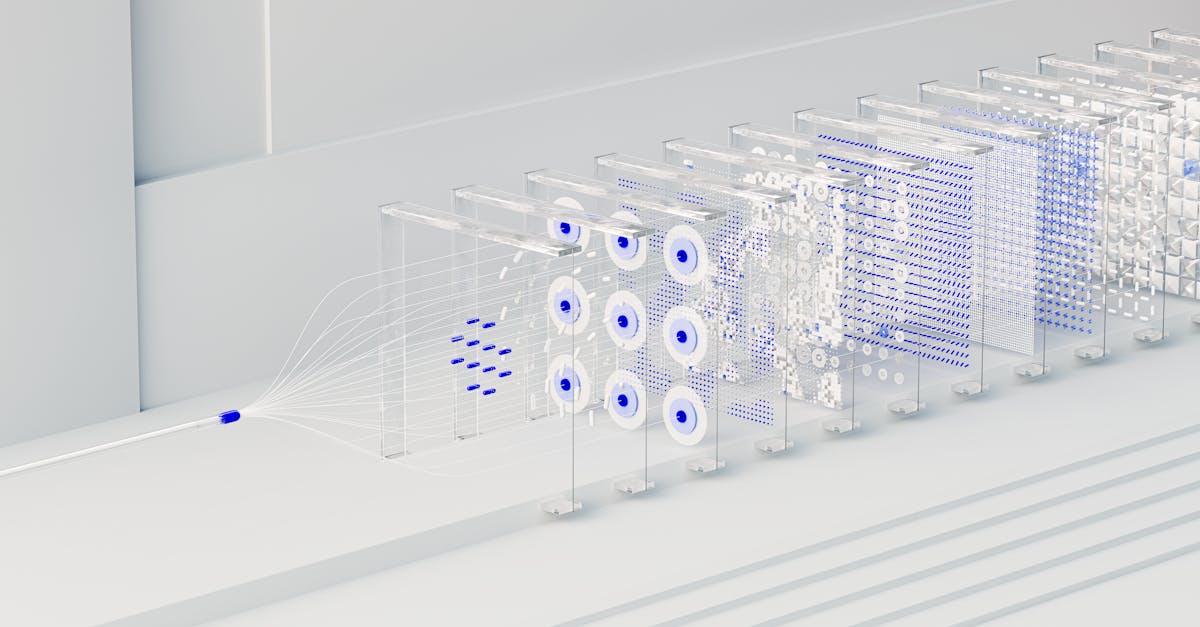

- The nodes in a neural network are arranged in layers, with each layer contributing to the extraction of specific features from the data.

- The input layer receives data, the hidden layers process it, and the output layer provides the final result.

- By training on labeled datasets, neural networks learn to make accurate predictions and classifications, continuously improving their performance through iterations.
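The layered structure described above can be sketched as a forward pass through a tiny network with two inputs, three hidden nodes, and one output. The weights and biases here are hand-picked, hypothetical values; in a real network they would be learned during training. The helper names `dense` and `forward` are likewise assumptions for this example.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one node."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters for a 2-3-1 network.
hidden_weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[0.7, -0.5, 0.2]]
output_biases = [0.05]


def forward(features):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense(features, hidden_weights, hidden_biases, sigmoid)
    return dense(hidden, output_weights, output_biases, sigmoid)


prediction = forward([1.0, 2.0])
print(prediction)
```

The input list plays the role of the input layer, `hidden` holds the intermediate features, and the final list is the network's output.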

A thorough knowledge of neural networks is required for optimizing AI applications.

To dig deeper into this topic, check out this full guide to neural networks by experts in the field.

Later, we will look at the various types of neural networks and their unique applications in different industries.

Types of Algorithms for Neural Networks

When it comes to neural networks, different algorithms play a significant role in their functionality.

Understanding these algorithms is critical for harnessing the full potential of neural networks across various industries.

Below, we highlight some key algorithms commonly used in neural networks:

- Backpropagation:

  - Key algorithm for training neural networks.

  - Adjusts the weights of connections to minimize errors between predicted and actual outcomes.

- Convolutional Neural Networks (CNNs):

  - Specialized for processing grid-like data, such as images.

  - Use convolutional layers to detect patterns within the input data.

- Recurrent Neural Networks (RNNs):

  - Ideal for sequential data and time series analysis.

  - Incorporate loops that allow information to persist, which is important for tasks like speech recognition.

- Generative Adversarial Networks (GANs):

  - Comprise two neural networks, the generator and the discriminator, in a competitive setting.

  - Used for tasks like generating realistic images and augmenting data.
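To make the idea of a convolutional layer detecting patterns more concrete, here is a small sketch of the core operation in plain Python. The function name `conv2d`, the 4x4 image, and the hand-written edge kernel are assumptions for illustration; in a trained CNN the kernel values are learned rather than written by hand.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Kernel that responds where brightness increases from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The non-zero middle column of the result marks where the vertical edge sits in the image, which is the kind of local pattern a convolutional layer picks out.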

To learn more about neural network algorithms and their applications, check out this full guide on neural network algorithms.

After all, the choice of algorithm is key in determining the efficiency and effectiveness of a neural network in solving specific tasks.

Key Concepts to Grasp

When exploring neural networks, it is critical to understand the key concepts that form the backbone of their operations.

Let's look at some key principles that are essential for understanding the inner workings of algorithms in neural networks.

- Activation Functions: These functions determine the output of each neuron from its weighted inputs. Understanding common activation functions such as ReLU, Sigmoid, and Tanh is important for shaping the behavior of neurons in the network.

- Gradient Descent: A key optimization algorithm used to minimize errors in neural network training. It involves adjusting weights and biases iteratively to reach the optimal model parameters.

- Overfitting and Underfitting: Balancing model complexity is critical to prevent overfitting (model memorizing training data) or underfitting (model oversimplifying data).

- Loss Functions: These functions quantify the difference between predicted and actual values during training. Common loss functions include Mean Squared Error (MSE) and Cross-Entropy Loss.

- Hyperparameters: Settings chosen before training that define the structure of the neural network and affect its learning process. Examples include learning rate, batch size, and number of hidden layers.
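The activation and loss functions named in the list above are short formulas, and a minimal sketch in plain Python may help make them concrete. The function names and the `eps` clipping value are assumptions for this example; the cross-entropy shown is the binary form, and deep learning libraries typically ship their own optimized versions.

```python
import math


def relu(z):
    """ReLU: pass positive values through, clamp negatives to zero."""
    return max(0.0, z)


def sigmoid(z):
    """Sigmoid: squash any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Tanh: squash any real number into (-1, 1)."""
    return math.tanh(z)


def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy for 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


print(relu(-2.0), relu(3.0))                       # 0.0 3.0
print(sigmoid(0.0), tanh(0.0))                     # 0.5 0.0
print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))  # 2.0
```

A lower loss value means the predictions are closer to the targets, and that is the quantity gradient descent tries to reduce.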

Building a solid understanding of these key concepts lays a strong foundation for exploring the complex world of neural network algorithms.

To explore the technical details of neural network algorithms further, check out this full guide on Understanding Neural Network Algorithms.
