GPT-3 is a powerful natural language processing tool that has been making waves throughout the internet.

While it’s easy to get started with, common questions begin to arise as you dig deeper into GPT-3.

One is whether GPT-3 is deterministic or stochastic.

Though GPT-3 is naturally stochastic, it can easily be made (effectively) deterministic by setting the temperature of a text completion to 0.

If that sounds complicated, don’t worry; we’ll dive deep into making GPT-3 deterministic with some Python code below and discuss the pros and cons of this approach.

You’ll also find out which tasks are best suited for a deterministic model and which should employ a stochastic approach.

By the end of this post, you should better understand how GPT-3 works under the hood and how to get the kind of text responses you want from it.

Deterministic Machine Learning Models

Deterministic machine learning models are those that don’t have any randomness or chance involved.

They always produce the same outcome when given the same input, almost as if their results are formulaic.

For example, think of a simple function like y = x + 5, where the same x will always give you the same y.
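To make the distinction concrete, here is a minimal Python sketch contrasting the deterministic function above with a stochastic variant. The function names and the Gaussian noise term are illustrative assumptions, not part of any real model.

```python
import random


def deterministic(x: float) -> float:
    """y = x + 5: the same x always yields the same y."""
    return x + 5


def stochastic(x: float, rng: random.Random) -> float:
    """y = x + 5 plus Gaussian noise: the same x can yield different y values."""
    return x + 5 + rng.gauss(0, 1)


rng = random.Random()
print([deterministic(2) for _ in range(3)])              # [7, 7, 7]
print([round(stochastic(2, rng), 2) for _ in range(3)])  # varies from run to run
```

Calling the first function repeatedly with the same input can only ever give one answer; the second one draws fresh noise on every call.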

Deterministic models are more predictable and easier to trust, since they produce consistent results without surprises.

They can also be tweaked and adjusted easily with some coding knowledge.

These changes are usually easier to understand and reverse engineer due to their repeatability.

However, there are some downsides.

Due to their concrete nature, they do not handle complex tasks as efficiently as non-deterministic models.

This makes sense, as the outside world is rarely absolute, and modeling it that way will usually cost you some accuracy.

Is GPT-3 Deterministic?

GPT-3’s default parameters make the model stochastic, meaning it samples its output and can return different responses to the same input.

This allows for a much more dynamic model and produces results that are difficult to predict.

However, if you need a specific, repeatable result, it is possible to make GPT-3 deterministic.

This is done by setting the temperature parameter to 0.

With a temperature of 0, GPT-3 returns the same output whenever it is given the same input (in practice, tiny variations can occasionally slip through, but the output is effectively fixed).

While this can be useful in some cases, keep in mind that this approach limits the model’s ability to explore alternative wordings and can produce less interesting (more repetitive) output than the default settings.
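Under the hood, temperature rescales the model’s next-token scores (logits) before they are turned into probabilities. The sketch below illustrates this general technique with made-up logits for three hypothetical tokens. It is not OpenAI’s implementation, and treating a temperature of 0 as a plain argmax (greedy decoding) is an assumption made here to avoid dividing by zero.

```python
import math
import random


def token_probs(logits: list[float], temperature: float) -> list[float]:
    """Softmax of logits / temperature. Lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_token(logits: list[float], temperature: float, rng: random.Random) -> int:
    """Return the index of the chosen token."""
    if temperature == 0:
        # Temperature 0: always take the highest-scoring token (greedy, deterministic).
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = token_probs(logits, temperature)
    # Otherwise sample in proportion to probability, so repeated calls can differ.
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [2.0, 1.5, 0.5]  # hypothetical scores for three candidate tokens
rng = random.Random()
print([pick_token(logits, 0.7, rng) for _ in range(10)])  # usually a mix of indices
print([pick_token(logits, 0, rng) for _ in range(10)])    # always index 0
```

Lowering the temperature toward 0 concentrates almost all of the probability on the top-scoring token, which is why a temperature of 0 gives repeatable text.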

GPT-3 Deterministic Example

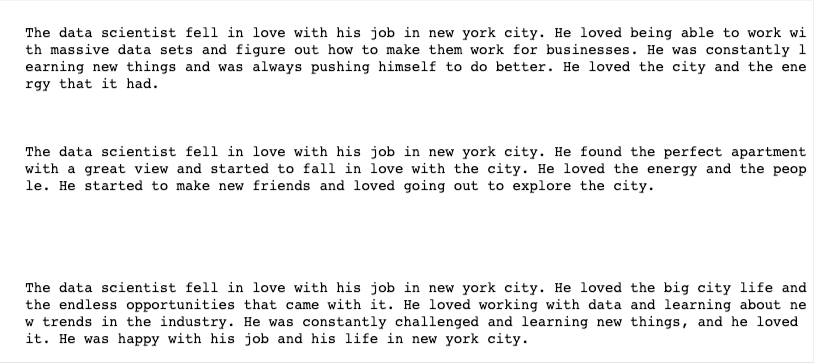

Below, we send the same prompt 3 times with the temperature set to 0.7 and print each response.

```python
import os

import openai  # uses the legacy (pre-1.0) openai-python Completion interface

openai.api_key = os.getenv("OPENAI_API_KEY")

p = '''Write a quick paragraph about a data scientist
who fell in love with his job in new york city'''

for _ in range(3):
    # generate the response
    response = openai.Completion.create(
        engine="davinci-instruct-beta-v3",
        prompt=p,
        temperature=.7,
        max_tokens=500,
        top_p=1,
        frequency_penalty=0,
        presence_penalty=0,
        stop=["12."]
    )

    # grab our text from the response
    text = response['choices'][0]['text']
    print(text + '\n')
```

We quickly notice that the responses differ from run to run: at this temperature, our model is stochastic.

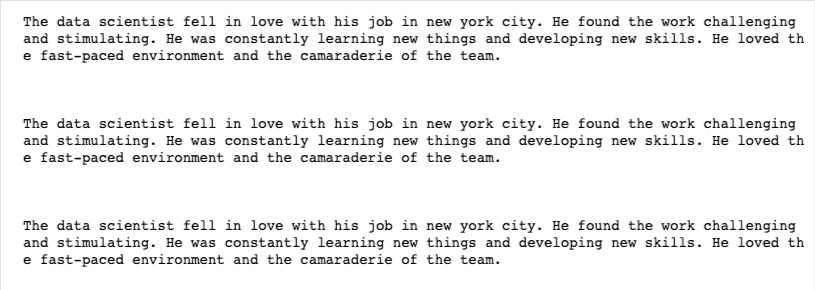

Now, let’s try this same prompt with our temperature value set to 0.

```python
import os

import openai  # uses the legacy (pre-1.0) openai-python Completion interface

openai.api_key = os.getenv("OPENAI_API_KEY")

p = '''Write a quick paragraph about a data scientist
who fell in love with his job in new york city'''

for _ in range(3):
    # generate the response
    response = openai.Completion.create(
        engine="davinci-instruct-beta-v3",
        prompt=p,
        temperature=0,
        max_tokens=500,
        top_p=1,
        frequency_penalty=0,
        presence_penalty=0,
        stop=["12."]
    )

    # grab our text from the response
    text = response['choices'][0]['text']
    print(text + '\n')
```

With our temperature set to 0, our model becomes deterministic. We see that we received the same response 3 times.
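If you want to check this yourself rather than eyeballing printed output, one option is to collect the completions and count how many distinct ones come back. The helper below is a hypothetical sketch: `count_distinct_outputs` and the two stand-in generator functions are illustrative names. In practice, `generate` would wrap the `openai.Completion.create` call from the loops above and return `response['choices'][0]['text']`.

```python
import random
from typing import Callable


def count_distinct_outputs(generate: Callable[[str], str], prompt: str, runs: int = 3) -> int:
    """Call generate(prompt) `runs` times and return how many distinct texts came back."""
    return len({generate(prompt) for _ in range(runs)})


# Hypothetical stand-ins for a real completion call.
def fixed_generator(prompt: str) -> str:
    # Mimics temperature=0: the same prompt always maps to the same text.
    return f"Response to: {prompt}"


_rng = random.Random()


def varied_generator(prompt: str) -> str:
    # Mimics temperature=0.7: the text changes from call to call.
    return f"Response #{_rng.randint(1, 1_000_000)} to: {prompt}"


prompt = "Write a quick paragraph about a data scientist"
print(count_distinct_outputs(fixed_generator, prompt))   # 1 -> deterministic
print(count_distinct_outputs(varied_generator, prompt))  # usually 3 -> stochastic
```

A count of 1 across several runs is what you would expect at a temperature of 0; anything higher points to sampling.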

Can You Train GPT-3 to Be Deterministic?

While fine-tuning GPT-3 can help reduce variability and randomness, it won’t be able to remove its randomness completely.

The simplest way to make GPT-3 deterministic is to set the temperature parameter to 0.

This keeps the output the same every time you send GPT-3 the same input.

However, this comes at a cost: setting the temperature to 0 removes some of the model’s ability to generate creative responses or explore different possibilities.

Instead, it gives rigid answers with little variation or exploration.

Therefore, while setting the temperature to 0 makes GPT-3 deterministic, do it only when you need repeatable output, since it makes the model’s responses less varied and creative.

Why GPT-3 Works Better as a Stochastic Model

Stochastic models are the better choice when a task isn’t formulaic.

That makes them a good fit for creative applications such as natural language processing, image generation (GANs), and machine translation.

These models shine in these domains because there isn’t always a single correct answer.

Natural language is highly contextual, and there are many ways to say the same thing.

Think about how “yeah, I’m fine” can mean two opposite things based on the context and the expression when being said.

GPT-3’s stochastic model allows for this flexibility and creativity, making it an excellent choice for natural language projects.

Are Any Neural Networks Deterministic?

Tons of neural networks are deterministic.

For example, consider the standard architecture of Convolutional Neural Networks (CNNs) used for image recognition.

These models repeatedly give you the same label when presented with the same image.

This is because the network turns its final scores into probabilities with a softmax and then simply takes the most probable label (the argmax) rather than sampling from those probabilities.

Therefore, a deterministic version of GPT-3 would likewise need to pick the single most likely token at each step instead of sampling, so that the temperature value introduces no variability.
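To see why always picking the most likely option makes output repeatable, here is a toy sketch of greedy decoding versus sampling. The `NEXT_TOKEN_PROBS` table is a made-up, hypothetical stand-in for a language model’s softmax output; a real model computes these probabilities with a neural network over a much larger vocabulary.

```python
import random

# Hypothetical next-token probabilities (what a softmax layer might output).
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"data": 0.7, "city": 0.3},
    "a": {"data": 0.5, "city": 0.5},
    "data": {"scientist": 0.9, "<end>": 0.1},
    "city": {"<end>": 1.0},
    "scientist": {"<end>": 1.0},
}


def generate(greedy: bool, rng: random.Random, max_len: int = 10) -> list[str]:
    tokens: list[str] = []
    current = "<start>"
    for _ in range(max_len):
        probs = NEXT_TOKEN_PROBS[current]
        if greedy:
            # Argmax: always choose the most probable next token (deterministic).
            current = max(probs, key=probs.get)
        else:
            # Sampling: choose in proportion to probability (stochastic).
            current = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]
        if current == "<end>":
            break
        tokens.append(current)
    return tokens


rng = random.Random()
print(generate(greedy=True, rng=rng))   # ['the', 'data', 'scientist'] every time
print(generate(greedy=False, rng=rng))  # may differ between runs
```

This mirrors the classifier case: taking the argmax of a softmax is deterministic, while sampling from the same probabilities is not.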

Other Articles In Our GPT-3 Series:

GPT-3 is pretty confusing. To combat this, we have a full-fledged series that will help you understand it at a deeper level.

Those articles can be found here:

- Does GPT-3 Plagiarize?

- Stop Sequence GPT-3

- GPT-3 Vocabulary Size

- Is GPT-3 Self-Aware?

- GPT-3 For Text Classification

- GPT-3 vs. Bloom

- GPT-3 For Finance

- Does GPT-3 Have Emotions?