Seeing what features are most important in your models is key to optimizing and increasing model accuracy.

Feature importance is one of the most crucial aspects of machine learning, and sometimes how you got to an answer is more important than the output.

Below we’ll go over how to determine the essential features in your neural networks and other models.

Finding the Feature Importance in Keras Models

The easiest way to find the importance of the features in Keras is to use the SHAP package. This algorithm is based on Professor Su-In Lee’s research from the AIMS Lab. This algorithm works by removing each feature and testing how much it affected the outcome and accuracy. (Source, Source)

Using SHAP with Keras Neural Network (CNN)

Remember, before using this package; we will need to install it.

pip install shap

Now that our package is installed, we can use SHAP to figure out which features are most important.

import shap

import numpy as np

letters = trainning_data[np.random.choice(trainning_data.shape[0], 100, replace=False)]

This step will assume you’ve already designed your model.

Since this function works by removing features one at a time, you’ll need a finished model before SHAP can test all of your features.

explain = shap.DeepExplainer(model, letters)

our_values_for_shap= explain.shap_values(test_data[1:5])

shap.image_plot(our_values_for_shap, -test_data[1:5])

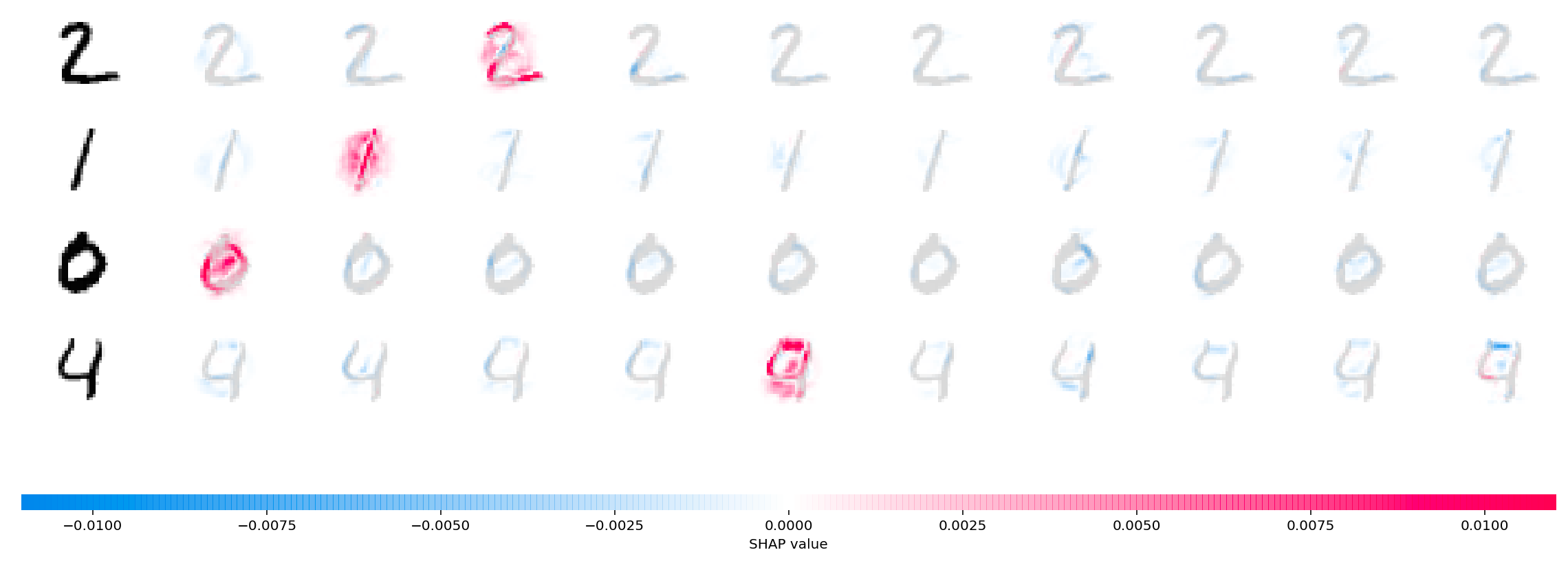

Explaining a Keras SHAP output

This will result in an image like the one displayed here. (Source)

This image may seem overwhelming, but this answer is helpful.

To explain this, we will start with the four. On the left, we see the bolded four, signifying what a “4” actually looks like.

Blue values hurt the model in terms of accuracy; for example, the second four from the left have a bit of blue at the bottom; if those pixels are present in our hand drawing, they will decrease our accuracy at predicting “4”.

This makes total sense; if there is a curve at the bottom of the four, the number will look much more like a 0 or a 6.

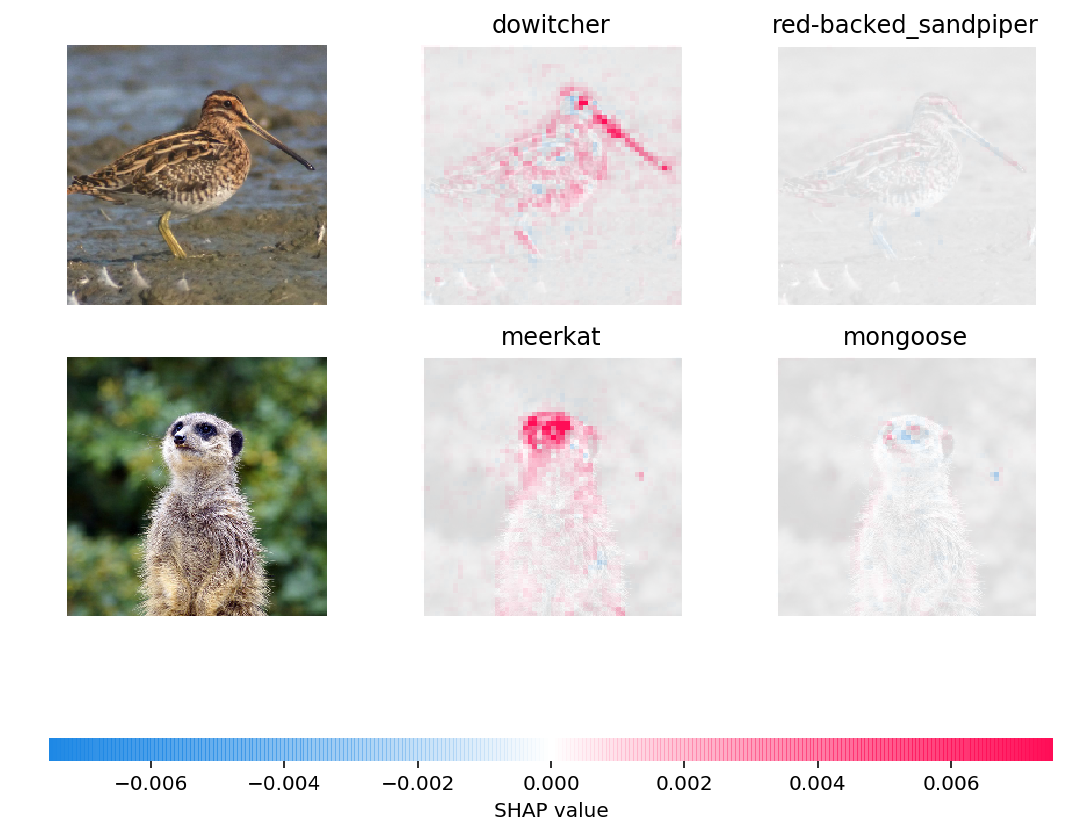

Another example (Source)

This one is much more obvious, and sticking with our colors before, we quickly notice that our model uses the eyes of the meerkat to accurately predict a specific image as a meerkat and uses the beak of a to predict the dowitcher.

This makes sense, as Meerkats have a distinct face region and lack a beak.

Starting your model correctly is how you have success during modeling, we will teach you how in Keras Input Shape.

Some others, like Keras Shuffle, are also super important for modeling accuracy.

What is PCA Used For?

Principal component analysis (PCA) reduces the number of dimensions in your data.

This could take data with 10,000+ columns (variables) down to just two linear combinations (eigenvectors), representing the original 10,000 column dataset.

You could use these linear combinations to model and still see similar accuracies to original modeling techniques, as long as the linear combinations from your projections account for a high amount of variance.

We get a ton of questions about how to know steps per epoch in Keras and we go over it in-depth in that linked article.

PCA For Feature Selection

Feature selection is tough, but some other tools besides SHAP can help you extract the most important data from your training data.

Here’s a unique situation to think through, and it indeed shows the importance of PCA and how reducing your data down into smaller dimensions can help you extract the most essential features.

Suppose you had a super lean dataset with only 1200 rows.

Right away, we know this is a very small dataset, but there is no way that you will be able to get more data.

Let’s also say that you have 2000 columns, which all have predictability and are equally important columns.

We’re now in the data scientist’s worst spot, where we have more variables (dimensions) than rows of data.

We’re going to assume some tricks like columns swapping (where your columns become your rows) aren’t available.

When the number of predictors (your dimensions) is greater than the number of columns, models have difficulty finding unique solutions based on the column make-up.

Without going too far, your models will probably be inadequate and inaccurate.

This is where PCA comes in.

Imagine now that we perform our PCA; we notice that we can cut our 2000 columns down to about 15 eigenvectors while maintaining about 95% of the variance.

We now have our 15 features!

We will build a model over these 15 eigenvectors and use this model for testing and production.

Dense Layer is fundamental in machine learning and is something you should probably check out.

Using PCA in Production Systems

One common missed thing is if you train a model on PCA, every other input into that model, whether it’s validation, testing, or production, must also pass through the same PCA process.

People will often perform PCA on their dataset and train up a model utilizing the eigenvectors, but in production, they try to feed regular inputs through it.

This obviously won’t work, as our model is expecting 15 eigenvectors, and if you were to push through the original 2000 variables, our model wouldn’t even know how to take it.

How does PCA work in Keras?

PCA in Keras works exactly how PCA works in other packages, by projecting your dataset into a different subspace with vectors that maximize the variance in the dataset.

The amount of variance that these vectors hold is the eigenvalues.

This is why we always filter our eigenvalues in order from greatest to least, as we want the features that explain the most (variance) from our dataset.

Difference between PCA and SHAP

The easiest way to break this down is by keeping it simple.

SHAP is used after a model is already built to see what features are most important and what impact they have on the outcome

PCA is used before a model is built to reduce the dimensions of your dataset into an advantageous situation.

Mathematically, SHAP and PCA are not similar one bit and do not rely on the same techniques to get to their destinations.

SHAP is an iterative approach, trying out a model feature by feature to understand its significance, while PCA is a dataset-wide approach, computing eigenvectors from the covariance matrix between each of the features.

In PCA, the final eigenvectors will have little to no resemblance to the original features, as these new features were computed from the covariance matrix of features.

When to use PCA and when to use SHAP

If you currently have a model that ran and is showing great accuracy on out-of-sample tests, you should use SHAP to understand what features are more critical and to get a better understanding of the features in the model.

Suppose you are running into trouble during the modeling process like your data is too big or you have more columns than rows.

In that case, you should use PCA to transform your dataset into significant eigenvectors that could prove advantageous to the modeling process.

The last use of PCA over SHAP is charting. As humans, once we move past three dimensions (3 columns), it becomes impossible to chart our data.

If we used PCA to reduce our data down into 2-3 dimensions where we could now plot it, this might allow us and our teammates to see insights in the data that initially weren’t visible.

- Debug CI/CD GitLab: Fixes for Your Jobs And Pipelines in Gitlab - July 1, 2025

- Why We Disable Swap For Kubernetes [Only In Linux??] - July 1, 2025

- Heuristic Algorithm vs Machine Learning [Well, It’s Complicated] - June 30, 2025